Modelling beat tracking - a multi agent system

The following text describes my diploma thesis, written in 2004. Its subject is the “computer-based modelling of metre in music”. To simulate metric perception, I implemented a multi-agent beat tracking system. I would also like to show you the GUI of my application and offer some examples for download.

Subject of my thesis

I would like to take this opportunity to present the results of my diploma thesis, because I think they should be accessible to a wide audience. If you like, you can download the complete text as a PDF file. The thesis is currently only available in German; I apologise for that.

The subject of this work is the computer-based detection of beat and metre in music, that is, the real-time detection and generation of temporal regularities. For this purpose I analysed the relevant models of musical perception and evaluated their suitability as a starting point for such an implementation.

I then took up the promising approach of Simon Dixon, a multi-agent system, and developed, extended and evaluated it.

My thesis can be regarded as a contribution to the field of synchronisation between human and machine, because the model and implementation of human music perception and production achieve two things:

- the perception of regular pulses in music (pattern recognition)

- the generation of consistent pulses with music (musical accompaniment)

In this context, empirical data is as important as scientific reasoning. The vast majority of the input data was kindly provided by Henkjan Honing. It stems from an experiment in which twelve pianists were invited to play two pieces, “Yesterday” and “Michelle”. Both pieces have a relatively simple rhythmic structure and lend themselves to being played at different tempos with varied expression. Four of the players were jazz pianists, four were professional classical pianists and four were beginners. The musicians had to play each song three times at each of three tempos: normal, slow and fast. The resulting 216 interpretations (12 pianists × 2 pieces × 3 tempos × 3 repetitions) were played on a “Yamaha Disklavier Pro”, recorded with the “Opcode Vision” sequencer and saved as MIDI files.

The functionality of the system

At this point I would like to describe the functionality and usage of the package “de.uos.fmt.musitech.beattracking”. As the name suggests, the package is part of a music framework called Musitech, which is developed at the “Research Department of Music and Media Technology” of the University of Osnabrück.

I wrote the software in Java. It comprises 30 classes with a total of about 20,000 lines of code. On an 1800 MHz computer, the analysis of a MIDI file takes about 1.5 seconds for a Yesterday interpretation and 1.6 seconds for a Michelle interpretation. For comparison, the mean length of the interpretations is about 66 and 59 seconds respectively. The real-time version requires much more time to process an interpretation: on average 11.8 seconds for a Yesterday and 6.4 seconds for a Michelle interpretation.

![]()

UML-diagram of the real time system

Let us take a look at the Java implementation. In real-time mode, beat tracking starts with the creation of a “BeatTrackingSystem” instance; for offline processing, a “BeatTrackerSystem” is instantiated instead. In the following I describe the real-time mode. How it differs from offline processing is shown in a later section.

Preprocessing and initialisation

The preprocessing relies on classes of the Musitech project. The input data is handed over to the system as a music.Container. This could be, for example, the notePool of a music.Work object. Such an object can be created by importing a MIDI file with a midi.MidiReader.
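Outside of Musitech, comparable note data could be read with the standard javax.sound.midi package. The following is only a sketch under that assumption: it does not use midi.MidiReader or music.Container, and the class MidiOnsetSketch with its methods is a hypothetical name chosen for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import javax.sound.midi.InvalidMidiDataException;
import javax.sound.midi.MidiEvent;
import javax.sound.midi.MidiMessage;
import javax.sound.midi.Sequence;
import javax.sound.midi.ShortMessage;
import javax.sound.midi.Track;

// Hypothetical helper, not part of Musitech: extracts note onsets from a MIDI sequence.
class MidiOnsetSketch {

    /** Collects {tick, pitch, velocity} for every note-on message with velocity > 0. */
    static List<long[]> noteOns(Sequence sequence) {
        List<long[]> notes = new ArrayList<>();
        for (Track track : sequence.getTracks()) {
            for (int i = 0; i < track.size(); i++) {
                MidiEvent event = track.get(i);
                MidiMessage message = event.getMessage();
                if (message instanceof ShortMessage) {
                    ShortMessage sm = (ShortMessage) message;
                    // A note-on with velocity 0 is conventionally a note-off, so it is skipped.
                    if (sm.getCommand() == ShortMessage.NOTE_ON && sm.getData2() > 0) {
                        notes.add(new long[] {event.getTick(), sm.getData1(), sm.getData2()});
                    }
                }
            }
        }
        return notes;
    }

    /** Builds a tiny in-memory sequence with two notes so the sketch runs without a file. */
    static Sequence demoSequence() {
        try {
            Sequence sequence = new Sequence(Sequence.PPQ, 480);
            Track track = sequence.createTrack();
            track.add(new MidiEvent(new ShortMessage(ShortMessage.NOTE_ON, 0, 60, 90), 0L));
            track.add(new MidiEvent(new ShortMessage(ShortMessage.NOTE_OFF, 0, 60, 0), 480L));
            track.add(new MidiEvent(new ShortMessage(ShortMessage.NOTE_ON, 0, 64, 70), 480L));
            track.add(new MidiEvent(new ShortMessage(ShortMessage.NOTE_OFF, 0, 64, 0), 960L));
            return sequence;
        } catch (InvalidMidiDataException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        for (long[] n : noteOns(demoSequence())) {
            System.out.println("tick " + n[0] + ", pitch " + n[1] + ", velocity " + n[2]);
        }
    }
}
```

For a real recording, the sequence would come from MidiSystem.getSequence with a file argument instead of demoSequence().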

From this container a RhythmicEventSequence of RhythmicEvents is created. Each event groups notes that lie very close together in time into one object. The maximum temporal distance is called mergeMargin.
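The grouping step could look roughly like the following sketch. It assumes that onsets are given in seconds and sorted, and that the margin is measured from the first note of a group; the class MergeSketch and its methods are hypothetical and not taken from the Musitech sources.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: names are illustrative, only mergeMargin comes from the text.
class MergeSketch {

    /** Groups sorted onset times so that each group spans at most mergeMargin seconds. */
    static List<List<Double>> group(List<Double> sortedOnsets, double mergeMargin) {
        List<List<Double>> groups = new ArrayList<>();
        List<Double> current = new ArrayList<>();
        for (double onset : sortedOnsets) {
            // Start a new group once the onset is too far from the group's first note.
            if (!current.isEmpty() && onset - current.get(0) > mergeMargin) {
                groups.add(current);
                current = new ArrayList<>();
            }
            current.add(onset);
        }
        if (!current.isEmpty()) {
            groups.add(current);
        }
        return groups;
    }

    /** Mean onset of one group, usable as the onset time of the rhythmic event. */
    static double meanOnset(List<Double> group) {
        double sum = 0.0;
        for (double onset : group) {
            sum += onset;
        }
        return sum / group.size();
    }

    public static void main(String[] args) {
        List<Double> onsets = List.of(0.00, 0.02, 0.50, 0.53, 1.00);
        for (List<Double> g : group(onsets, 0.07)) {
            System.out.println(g + " -> mean onset " + meanOnset(g));
        }
    }
}
```

With a margin of 0.07 seconds, the five example onsets collapse into three events: two chords of two notes each and one single note.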

For the grouped notes, properties such as their mean onset, salience, length and velocity are calculated. This calculation is parameterized by velocityWeight, durationWeight, pitchWeight, pitchMin and pitchMax. These values define how strongly each factor influences the result.

After the RhythmicEventSequence has been added to the system, a TempoInductionAgent and a BeatTrackingManager are created. This completes the initialisation of the system, and calling the method trackBeat starts the beat tracking.
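To illustrate how the five parameters might interact, here is a minimal sketch that assumes a linear salience: a weighted sum of duration, velocity and a pitch that is clamped to the range from pitchMin to pitchMax. The linear form and the class SalienceSketch are assumptions; the thesis may combine the factors differently.

```java
// Hypothetical sketch: only the parameter names are taken from the text.
class SalienceSketch {
    final double velocityWeight;
    final double durationWeight;
    final double pitchWeight;
    final int pitchMin;
    final int pitchMax;

    SalienceSketch(double velocityWeight, double durationWeight, double pitchWeight,
                   int pitchMin, int pitchMax) {
        this.velocityWeight = velocityWeight;
        this.durationWeight = durationWeight;
        this.pitchWeight = pitchWeight;
        this.pitchMin = pitchMin;
        this.pitchMax = pitchMax;
    }

    /** Assumed linear salience; the pitch is clamped to [pitchMin, pitchMax] first. */
    double salience(double durationSeconds, int pitch, int velocity) {
        int clampedPitch = Math.max(pitchMin, Math.min(pitchMax, pitch));
        return durationWeight * durationSeconds
                + pitchWeight * clampedPitch
                + velocityWeight * velocity;
    }

    public static void main(String[] args) {
        SalienceSketch s = new SalienceSketch(1.0, 2.0, 0.5, 48, 72);
        System.out.println(s.salience(0.5, 60, 80));  // pitch inside the range
        System.out.println(s.salience(0.5, 100, 80)); // pitch clamped to pitchMax
    }
}
```

Clamping means that pitches outside the configured range contribute no more than the nearest boundary, so extreme notes cannot dominate the salience.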

Tempo induction and beat tracking

In the next step, all rhythmic events are processed by the system one after another. The following happens for each event:

- Each event is delivered to the TempoInductionAgent. This object creates tempo hypotheses based on the temporal distance between events.

- Every event is handed over to the BeatTrackingManager. The field currentTime holds the onset time, so the time between events, the delta time, can be calculated. The BeatTrackingManager needs this value to decay the tempo score of each agent: the new tempoScore is the product of its previous value and the parameter decayFactor raised to the power of deltaTime.

- Then the agent’s trackBeats method triggers the beat processing by the beat tracking agent. The agent measures how long it has waited for the next event. If this is too long, that is, greater than the parameter expiryTime, the agent is marked for deletion. In addition, the agent calculates an error, the difference between the onset of the event and the expected time. If the event occurs within a time window specified by preMargin and postMargin, it is classified as a beat. In that case the event is added to the agent’s history, the beatCount is incremented and the beatInterval is adjusted. This adjustment depends on the parameter correctionFactor.

- In addition, the agent gets a new phaseScore based on the old value, the error, the stress and the parameter conFactor, a kind of learning rate. However, this approach favours agents that were created early, because they have more time to increase their phase score and the score never decreases. For this reason the phase calculation of the real-time version was extended by the parameter phaseDecay, which gives younger agents a chance because the decay fades out old scores.

- If the event falls only into the outer window, a second agent is created that ignores this event.

- Some agents have predicted the current event. Their tempo score is increased.

- After that the tempo score of every agent is updated. The new value is the sum of the old value and the score that the tempo induction agent assigns to the agent’s beat interval. Remember that the beat interval is nothing other than the tempo hypothesis of an agent.

- The beat tracking manager requests the best tempo hypothesis from the tempo induction agent. If no agent has this best-fitting tempo, the manager creates such an agent and sets its score.

- The tempo is then scored a third time. The score is increased when there are more agents with similar tempo hypotheses, meaning that their beat interval is close to an integer divisor or multiple of the tempo being scored.

- Finally the beat tracking manager determines the agent with the best tempo and phase score and increases its top score time by the elapsed time period. The top score time of all other agents is halved.

- After the agent has been added to a list of the best agents, processing of the next event can start.
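The per-agent part of these steps can be sketched as follows. The field and parameter names follow the text, and the decay rule is the one stated above. The window test and the way correctionFactor scales the error are assumptions made for illustration, and the class AgentSketch is hypothetical.

```java
// Hypothetical sketch of a single beat tracking agent; formulas other than the decay are assumed.
class AgentSketch {
    double beatInterval;      // tempo hypothesis in seconds
    double lastBeat;          // onset of the last accepted beat in seconds
    double tempoScore = 1.0;
    int beatCount = 0;
    boolean markedForDeletion = false;

    final double decayFactor;
    final double expiryTime;
    final double preMargin;
    final double postMargin;
    final double correctionFactor;

    AgentSketch(double firstBeat, double beatInterval, double decayFactor, double expiryTime,
                double preMargin, double postMargin, double correctionFactor) {
        this.lastBeat = firstBeat;
        this.beatInterval = beatInterval;
        this.decayFactor = decayFactor;
        this.expiryTime = expiryTime;
        this.preMargin = preMargin;
        this.postMargin = postMargin;
        this.correctionFactor = correctionFactor;
    }

    /** tempoScore becomes tempoScore * decayFactor^deltaTime, as described in the text. */
    void decay(double deltaTime) {
        tempoScore *= Math.pow(decayFactor, deltaTime);
    }

    /** Returns true if the event at the given onset was accepted as a beat. */
    boolean trackBeat(double onset) {
        if (onset - lastBeat > expiryTime) {
            markedForDeletion = true; // waited too long for a matching event
            return false;
        }
        double error = onset - (lastBeat + beatInterval);
        if (error < -preMargin || error > postMargin) {
            return false; // outside the window: not a beat for this agent
        }
        beatInterval += correctionFactor * error; // assumed form of the adjustment
        lastBeat = onset;
        beatCount++;
        return true;
    }

    public static void main(String[] args) {
        AgentSketch agent = new AgentSketch(0.0, 0.5, 0.9, 2.0, 0.05, 0.05, 0.5);
        System.out.println(agent.trackBeat(0.52) + ", interval " + agent.beatInterval);
        System.out.println(agent.trackBeat(0.80)); // too early for the next beat
    }
}
```

The phase score, the outer window and the creation of second agents are left out here, because the text does not give enough detail to sketch them without guessing.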

Difference between real time and offline version

Let us take a closer look at the offline beat tracking. The real-time version re-estimates the tempo continuously with the help of a tempo induction agent. This agent maintains a time window that is moved through the song. By contrast, the offline version performs the tempo induction only once. As a result, the offline version only clones agents with different phases, that is, beat times, but no agents with different beat intervals.

The tempo induction agent is basically an array that stores, like a histogram, the distribution of the inter-onset intervals (IOIs). The IOIs are clustered in order to reduce the amount of data. A second reduction implements a kind of forgetting: old IOIs are not considered when the clusters are calculated. Each cluster is specified by a relative and an absolute time frame. Assigning an IOI to a cluster increments not only the count of that cluster but also the counts of the adjacent clusters.
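A much simplified version of such a histogram is sketched below. It assumes clusters of fixed width instead of relative and absolute time frames, a sliding window of recent onsets as the forgetting mechanism, and a weight of 0.5 for the adjacent clusters. All names and these three choices are assumptions, not the actual implementation.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical sketch of an IOI histogram with forgetting and neighbour increments.
class TempoInductionSketch {
    final double binWidth; // assumed fixed cluster width in seconds
    final double memory;   // onsets older than this (relative to the newest) are forgotten
    final int binCount;
    final Deque<Double> onsets = new ArrayDeque<>();

    TempoInductionSketch(double binWidth, double maxInterval, double memory) {
        this.binWidth = binWidth;
        this.memory = memory;
        this.binCount = (int) Math.round(maxInterval / binWidth) + 1;
    }

    /** Adds an onset and drops all onsets that have left the time window. */
    void add(double onset) {
        while (!onsets.isEmpty() && onset - onsets.peekFirst() > memory) {
            onsets.removeFirst();
        }
        onsets.addLast(onset);
    }

    /** Builds the histogram from all onset pairs that are still inside the window. */
    double[] histogram() {
        double[] counts = new double[binCount];
        Double[] window = onsets.toArray(new Double[0]);
        for (int i = 0; i < window.length; i++) {
            for (int j = i + 1; j < window.length; j++) {
                int bin = (int) Math.round((window[j] - window[i]) / binWidth);
                if (bin < 1 || bin >= binCount) {
                    continue; // interval too short or too long to be a tempo hypothesis
                }
                counts[bin] += 1.0;
                counts[bin - 1] += 0.5; // the adjacent clusters are incremented as well
                if (bin + 1 < binCount) {
                    counts[bin + 1] += 0.5;
                }
            }
        }
        return counts;
    }

    /** Returns the interval (in seconds) of the cluster with the highest count. */
    double bestInterval() {
        double[] counts = histogram();
        int best = 1;
        for (int i = 2; i < counts.length; i++) {
            if (counts[i] > counts[best]) {
                best = i;
            }
        }
        return best * binWidth;
    }

    public static void main(String[] args) {
        TempoInductionSketch agent = new TempoInductionSketch(0.05, 2.0, 1.25);
        for (double t : new double[] {0.0, 0.5, 1.0, 1.5, 2.0}) {
            agent.add(t);
        }
        System.out.println("best interval: " + agent.bestInterval());
    }
}
```

Because the histogram is rebuilt from the current window only, a tempo change in the performance shifts the best interval after roughly one window length.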

There are only a few differences between the real-time and the offline version in the subsequent processing of the rhythmic events by the agents. The real-time version implements collaboration between agents with respect to their beat interval. The offline version determines the best agent after all rhythmic events have been processed, whereas the real-time version determines the best agent after each event. To avoid constantly changing best agents, the system favours agents that are already winning: the deciding factor is not the score itself but the time an agent has spent as the best agent, the so-called top score time.

Let us take a look at the actual system output. The detected beats are added as beat markers to a metrical time line object, which is part of the object representing the song. If the processed song is then displayed as a piano roll, vertical lines mark the beats.

Finally, let me show you the whole process as pseudocode:
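The two manager-level ideas, collaboration by related beat intervals and selection by top score time, can be sketched like this. The sketch assumes that each agent has one combined score and that two intervals count as related when their ratio is within a tolerance of an integer; the class ManagerSketch, its methods and the tolerance are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of collaboration and top score time handling.
class ManagerSketch {

    static class Agent {
        final String name;
        final double beatInterval;
        double score;        // assumed: tempo and phase score combined into one number
        double topScoreTime; // time accumulated while being the highest-scoring agent

        Agent(String name, double beatInterval, double score) {
            this.name = name;
            this.beatInterval = beatInterval;
            this.score = score;
        }
    }

    /** True if one interval is close to an integer multiple or divisor of the other. */
    static boolean related(double intervalA, double intervalB, double tolerance) {
        double ratio = Math.max(intervalA, intervalB) / Math.min(intervalA, intervalB);
        return Math.abs(ratio - Math.round(ratio)) <= tolerance;
    }

    /** Updates all top score times after one event and returns the agent to report. */
    static Agent updateTopScoreTimes(List<Agent> agents, double deltaTime) {
        Agent leader = agents.get(0);
        for (Agent a : agents) {
            if (a.score > leader.score) {
                leader = a;
            }
        }
        for (Agent a : agents) {
            if (a == leader) {
                a.topScoreTime += deltaTime; // the current leader gains the elapsed time
            } else {
                a.topScoreTime /= 2.0;       // all others lose half of their top score time
            }
        }
        Agent best = agents.get(0);
        for (Agent a : agents) {
            if (a.topScoreTime > best.topScoreTime) {
                best = a;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        List<Agent> agents = new ArrayList<>();
        Agent a = new Agent("A", 0.5, 1.0);
        a.topScoreTime = 4.0; // A has been winning for a while
        agents.add(a);
        agents.add(new Agent("B", 1.01, 2.0));
        for (int i = 0; i < 3; i++) {
            System.out.println("reported: " + updateTopScoreTimes(agents, 0.5).name);
        }
    }
}
```

In the example, agent B has the higher score from the start but is only reported once its top score time exceeds that of A, which is what prevents the best agent from flipping with every event.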

Real time version:

    FOR each event E_j
        TempoInductionAgent.add(E_j)
        BeatTrackingManager.add(E_j)
        BeatTrackingManager.update(TempoInductionAgent)
        BeatTrackingManager.collaborate()
        BeatTrackingManager.bestAgent()
    END FOR

Offline version:

    RhythmicEventSequence.tempoInduction()
    FOR each event E_j
        AgentList.beatTrack(E_j)
    END FOR
    AgentList.bestAgent()

Emphasis and metricity

The metric analysis inspects sequences of rhythmic events. First, the velocity of each event is normalised. Then the local emphasis of an event is calculated with the help of its preceding and following event. The computed values lie in the range from -1 to 1. Interpolated values have no velocity, so their emphasis is always set to zero.

Each agent is accompanied by a metric agent that observes two metricity properties: salience and regularity. The third metricity property, coincidence, is calculated by the agent itself. Finally, the agent’s metricity is the weighted sum of these properties.

The evaluation of both versions is based on the Beatles interpretations and compares the system output with the expected results. The difference is calculated as a cumulative error.
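One formula that matches this description is the normalised velocity of an event minus the mean normalised velocity of its two neighbours, which always lies between -1 and 1. The sketch below assumes exactly that formula, normalisation by the maximum velocity, a marker value of -1 for interpolated events, and a contribution of zero from interpolated neighbours. None of these details are confirmed by the text, and the class EmphasisSketch is hypothetical.

```java
// Hypothetical sketch of a local emphasis calculation; the formula is an assumption.
class EmphasisSketch {
    static final int INTERPOLATED = -1; // assumed marker for events without velocity

    static double[] emphasis(int[] velocities) {
        int n = velocities.length;
        int max = 1;
        for (int v : velocities) {
            max = Math.max(max, v);
        }
        // Normalise to the range 0..1; interpolated events count as 0 for their neighbours.
        double[] norm = new double[n];
        for (int i = 0; i < n; i++) {
            norm[i] = velocities[i] == INTERPOLATED ? 0.0 : (double) velocities[i] / max;
        }
        double[] result = new double[n];
        for (int i = 0; i < n; i++) {
            if (velocities[i] == INTERPOLATED) {
                continue; // the emphasis of interpolated events stays zero
            }
            // At the borders the missing neighbour is replaced by the event itself.
            double previous = i > 0 ? norm[i - 1] : norm[i];
            double next = i < n - 1 ? norm[i + 1] : norm[i];
            result[i] = norm[i] - (previous + next) / 2.0;
        }
        return result;
    }

    public static void main(String[] args) {
        double[] e = emphasis(new int[] {100, 50, 100, INTERPOLATED, 50});
        for (double value : e) {
            System.out.println(value);
        }
    }
}
```

A positive value marks an event that is louder than its surroundings, a negative value one that is softer.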

The user interface

The implemented Java classes can be used not only in combination with other classes but also as a stand-alone application. For this purpose I programmed a user interface based on Java Swing. The following sections show how to use it.

Main window

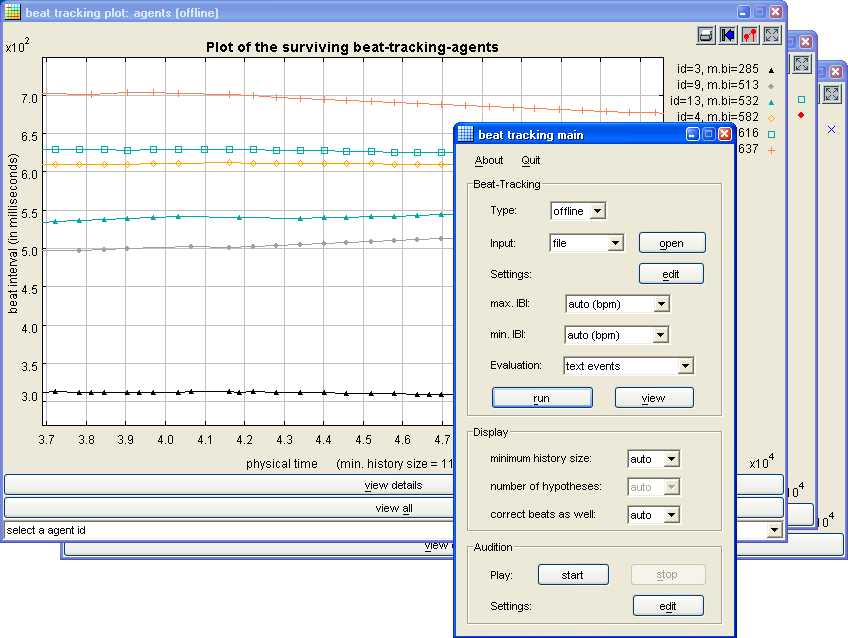

The screenshot shows two windows of the application. The small window in the foreground is the main window for editing the essential settings. It is organised into three parts. The upper section holds the beat tracking and evaluation parameters. The button “edit” opens an XML editor. The middle part configures the result view. The lowest section controls the audio playback.

The large window in the background shows an example of the graphical system output. Use the mouse to zoom into the graph. Three buttons at the bottom trigger further actions.

XML-Editor

The XML editor offers the possibility to edit all system parameters and load saved settings.

![]()

Input window

The system input window displays the loaded MIDI data, that is, the salience of the rhythmic events over time.

![]()

Output windows

The first system output window uses a piano roll and a table to show the rhythmic events and the detected beats.

![]()

The second output window plots the detected beat intervals as a function of time.

![]()

The third window shows the result of the metric analysis, that is, the detected time signature, the metre and the beginning of each bar.

![]()

Demos, downloads and sources

- Offline beat tracking for a Michelle interpretation

- Offline beat tracking for a Yesterday interpretation

- Online beat tracking for a Michelle interpretation

- Online beat tracking for a Yesterday interpretation

- Offline beat tracking for Waltz No. 15 by J. Brahms

- Online beat tracking for Waltz No. 15 by J. Brahms

What you hear is the audio input with the detected beats as click sounds.

Don't hesitate to ask for the project’s source code. Just send me an email or ask the Musitech project.

Download my diploma thesis for a full description of this project. Please excuse that the thesis is only available in German.